Minimal set-up for serving EXLV2-formatted LLMs

I have been working on a side project with an LLM integration, which someday I will share more about. As part of this project, I have been experimenting with various LLM quantization formats and found the Qwen 2.5 14B, quantized to 8 bits, offers an excellent balance of speed and performance on my 3090 RTX. Using the ExLlamaV2 format, I can achieve throughput of 40-55 token/second with an context window of 1-2 thousand tokens on my 3090 RTX (I haven’t needed larger context windows so far). The performance is impressive once the generation parameters are finet-tuned, although occasionally the model will start burping Chinese characters. Zoyd’s quantized version is the implementation I have been deploying lately.

I may write a separate post comparing EXLV2 to other popular formats, such as GGUF, on my hardware. For now, I wanted to share the source code I’ve written for an API endpoint. tabbyAPI is a solid option, but I wanted something more tailored to my needs. Important to note that I only require a single LLM response and am not currently using the HuggingFace (transformers) chat template, though I plan to extend the functionality in the future.

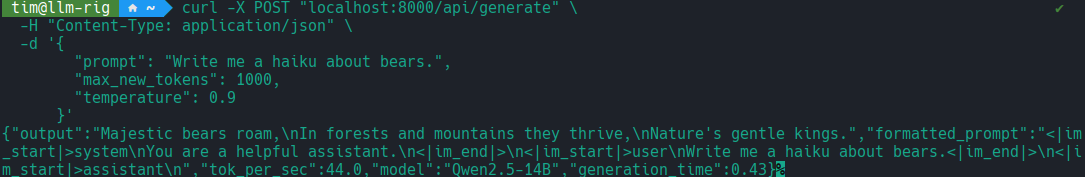

Here is a screenshot of a sample response.

For complete details on getting set-up, refer to the project home page at https://github.com/Tim-Roy/exl2-server.git. To get started you will need:

- An NVIDA GPU with sufficient RAM

- Linux environment

- ExLlamaV2

- flash attention

- An ExLlamaV2 quantized LLM

Once installed, set the environment variable MODEL_HOME to the path for the parent directory of the models you wish to deploy.

Next, copy the models.yaml configuration file to MODEL_HOME, and make any necessary changes.

Then, set EXL2_MODEL to one of the keys in models.yaml.

Finally deploy the server using uvicorn (for example, uvicorn exl2.server:app --host 0.0.0.0 --port 8000)

Now test the endpoint and have fun!

curl -X POST "localhost:8000/api/generate" \

-H "Content-Type: application/json" \

-d '{

"prompt": "Write me a haiku about bears.",

"max_new_tokens": 1000,

"temperature": 0.9

}'